Walking the Planck

Variable Constants & False Foundations

“Science progresses one funeral at a time.” —Max Planck (deceased)

Planck’s “constant,” a cornerstone of the reductionist science of quantum mechanics, was born out of a crisis in physics known as the Ultraviolet Catastrophe. Scientists, following Kirchhoff’s Law of Thermal Radiation (~1860), predicted from their equations that a black body (an idealized object that absorbs and emits all frequencies of radiation) would emit infinite energy at high frequencies, that is, at short wavelengths, which obviously contradicts physical observations.

Max Planck resolved this paradox in 1900 by introducing the concept of quantized energy levels. He proposed that energy is not emitted or absorbed continuously, but in discrete packets or “quanta” of energy. Planck’s introduction of quantized energy levels was initially considered a mathematical trick to fit experimental data. However, it fundamentally challenged the classical view of energy as a continuous variable, marking the birth of quantum physics.
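To make the catastrophe concrete, here is a minimal Python sketch comparing the classical Rayleigh-Jeans formula with Planck’s formula for the same black body. The function names and the 5000 K temperature are my own illustrative choices, and the constants are the officially fixed SI values; treat it as a sketch of the textbook formulas, not a reconstruction of Planck’s original derivation.

```python
import math

# Officially fixed SI values (exact by definition since 2019)
H = 6.62607015e-34    # Planck constant, J*s
C = 299_792_458.0     # speed of light, m/s
K_B = 1.380649e-23    # Boltzmann constant, J/K


def rayleigh_jeans(nu, temp):
    """Classical spectral radiance; grows without limit as nu increases."""
    return 2.0 * nu**2 * K_B * temp / C**2


def planck(nu, temp):
    """Planck spectral radiance; the exponential cuts off high frequencies."""
    x = H * nu / (K_B * temp)
    return (2.0 * H * nu**3 / C**2) / math.expm1(x)


if __name__ == "__main__":
    temp = 5000.0  # kelvin, an illustrative temperature
    for nu in (1e12, 1e13, 1e14, 1e15, 1e16):
        rj = rayleigh_jeans(nu, temp)
        pl = planck(nu, temp)
        print(f"{nu:.0e} Hz  Rayleigh-Jeans={rj:.3e}  Planck={pl:.3e}")
```

At low frequencies the two formulas agree closely; at high frequencies the classical value keeps climbing while Planck’s falls toward zero, which is the mismatch the quantum hypothesis was introduced to remove.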

In standard theory, Planck’s constant, denoted as h, is the key to the quantum world, ostensibly linking the energy of a photon to its frequency. h is a weeny little number, far beyond any human ability to sense its reality, yet it is a potent artifice, allowing the calculation of the energy of light particles and the discernment of the behavior of atoms and molecules under certain experimental conditions. It is a quantifier of action or energy related to processes or interactions, not a physical entity or particle.
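For readers who want to see the standard relation at work, the following is a small Python sketch of E = hν (equivalently E = hc/λ). The function names and the 500 nm example wavelength are hypothetical choices for illustration; the constants are the fixed SI values.

```python
H = 6.62607015e-34       # Planck constant, J*s (exact SI value)
C = 299_792_458.0        # speed of light, m/s (exact SI value)
EV = 1.602176634e-19     # joules per electronvolt (exact SI value)


def photon_energy_joules(frequency_hz):
    """Standard relation E = h * nu."""
    return H * frequency_hz


def photon_energy_ev(wavelength_m):
    """Same relation expressed through wavelength: E = h * c / lambda."""
    return H * C / wavelength_m / EV


if __name__ == "__main__":
    wavelength = 500e-9  # green light, an illustrative choice
    frequency = C / wavelength
    print(f"frequency: {frequency:.3e} Hz")
    print(f"energy:    {photon_energy_joules(frequency):.3e} J")
    print(f"energy:    {photon_energy_ev(wavelength):.3f} eV")
```

The energies come out at a few electronvolts per photon of visible light, which gives a feel for how weeny h really is.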

But is it constant as assumed?

Sheldrake and the inconstant “constants”

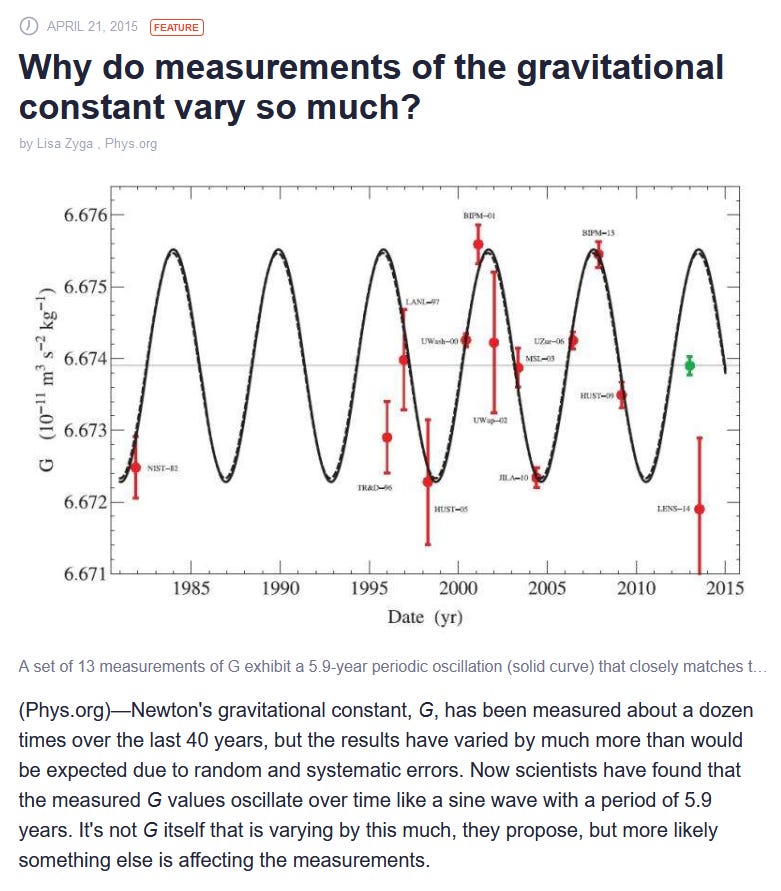

Rupert Sheldrake's "Science Delusion" research shakes the foundations of physics, questioning the constancy of the Universal Gravitational Constant, the Fine Structure Constant, and the Speed of Light. It's like finding out the rules of the game have been changing all along.

Rupert Sheldrake has brought into question whether or not the fundamental constants such as the speed of light “C” or the gravitational constant “G” are truly constant.

As Rupert discovered in reference to C and G, the values are fixed by a standards committee. In the case of G, the official value is an average of the various measurements from around the world over time. In the case of C, not only was the value fixed by definition in 1983, but it is used to define the meter. So if there is a change, you can’t tell, as the units change together.

I met Rupert at a conference a few years ago, and asked whether he had looked at Planck’s constant in his investigations.

He said that the work he did on C, G and the Fine Structure Constant took quite a bit of time and effort, and that other projects prevented him from pursuing it further. He said that if I looked into Planck’s constant I would likely find the same story. And I did.

The Planck is fixed officially, even though variations are regularly measured in labs.

The current official value of Planck's constant h was fixed in 2019 when the kilogram was redefined in terms of Planck's constant by the General Conference on Weights and Measures. This fixed value is now used in the International System of Units (SI).

But it gets worse for the Planck.

Challenging Kirchhoff and Planck Dogmas

Pierre-Marie Robitaille, a vocal critic of Kirchhoff’s law, challenges the universality of Planck’s constant by presenting experimental evidence that black body radiation depends on the material of the cavity. This suggests that h is probably not a universal constant. Robitaille’s work spans from the intricacies of MRI technology to fundamental questions in thermodynamics, particularly questioning the validity of Kirchhoff’s Law and Planck’s universality. His observations in MRI technology, where resonant cavities do not behave as perfect blackbodies, led him to propose that the assumptions underlying Planck’s constant and Kirchhoff’s Law might be more mathematical convenience than physical reality.

The implications of Robitaille’s challenge are seismic. If the tenets of thermal radiation are not as universal as presumed, the consequences for the standard model of the cosmos are staggering, from the smallest atoms to the vast spread of galaxies. Astrophysics relies heavily on these laws to interpret cosmic light and energy, and would therefore need to reconsider its most fundamental concepts, like the nuclear gaseous Sun, the Cosmic Microwave Background (and thereby the Big Bang), and that great god of scientismic worship itself, the Black Hole. In other words, everything theoretical physics believes in, because it has become more religious dogma than science based on experiment.

In the paper “Kirchhoff’s Law of Thermal Emission: What happens when a law of physics fails an experimental test?” Robitaille along with Joseph Luc Robitaille present their evidence proving why Kirchhoff’s Law is not universally valid. Their experiments show that the nature of the cavity walls does affect the radiation within, contradicting Kirchhoff's Law. They critique Max Planck’s proof of Kirchhoff’s Law, highlighting errors and assumptions that they show are incorrect. They conclude that Kirchhoff’s Law is false and that concepts derived from this law, such as Planck time and Planck temperature, lack fundamental meaning in physics. The failure of Kirchhoff’s Law in experimental tests leads to a better understanding of material emissive properties and the realization that the production of a thermal spectrum is dependent on the presence of a vibrational lattice.

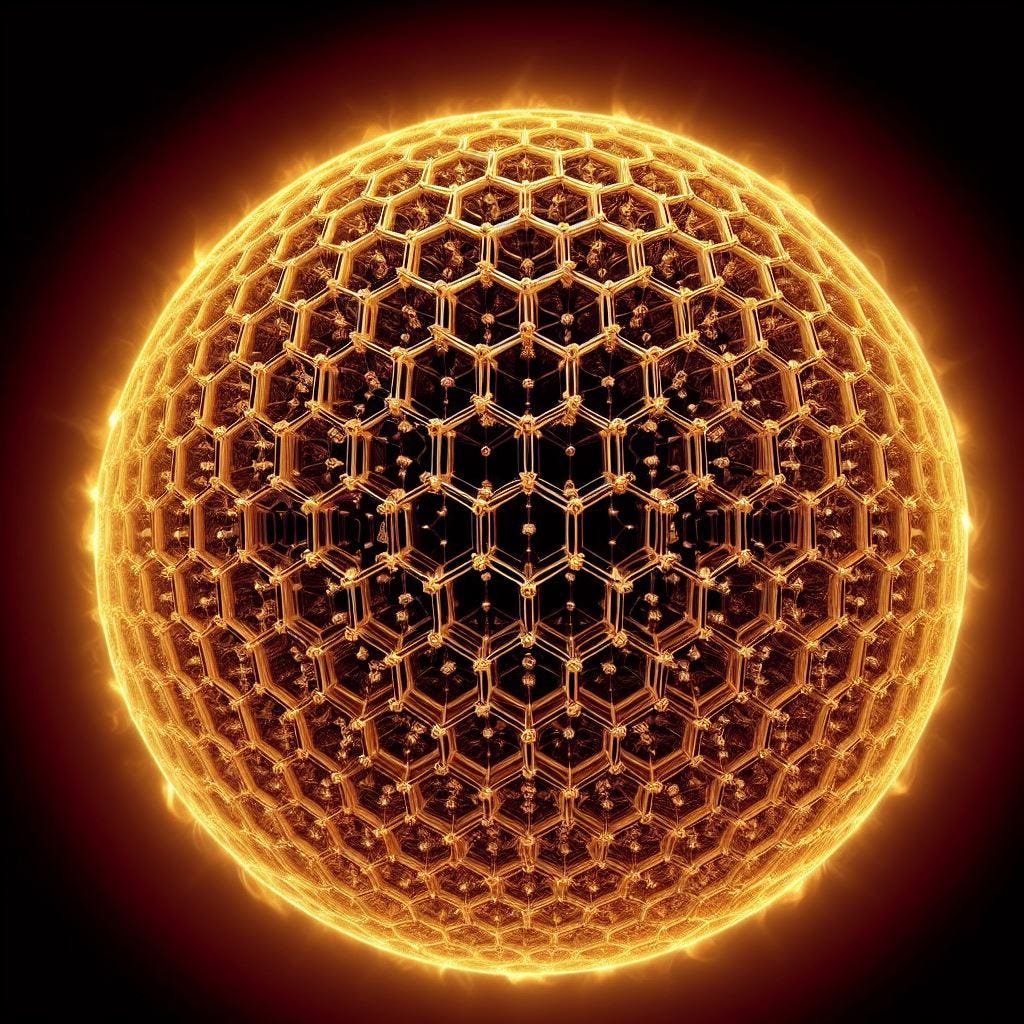

Robitaille’s The Liquid Metallic Hydrogen Model of the Sun and the Solar Atmosphere series of papers challenges conventional gaseous models of the Sun with a condensed matter perspective. He argues that the continuous spectrum observed in the solar photosphere and chromosphere cannot be adequately explained by gaseous models. Instead, he proposes that the Sun is comprised of condensed matter, with the photosphere containing liquid metallic hydrogen. This model suggests that both gaseous and condensed forms of matter exist above the photosphere, similar to Earth's atmosphere. Robitaille’s critique extends to foundational aspects of solar physics, advocating for a reevaluation of the Sun's structure and composition.

If you doubt Robitaille’s science, first question your beliefs in modern theory, then check out his work based on experimental data. His Sky Scholar YouTube channel is a gold mine of technical knowledge expertly explained in detail.

Planck’s Variable “Constant”

Richard Hutchin throws a curveball into this mix, suggesting that Planck’s constant might change with Earth's orbital position. His experiments with tunnel diodes show annual variations in voltage that hint at a Planck’s constant that wobbles as Earth moves around the sun.

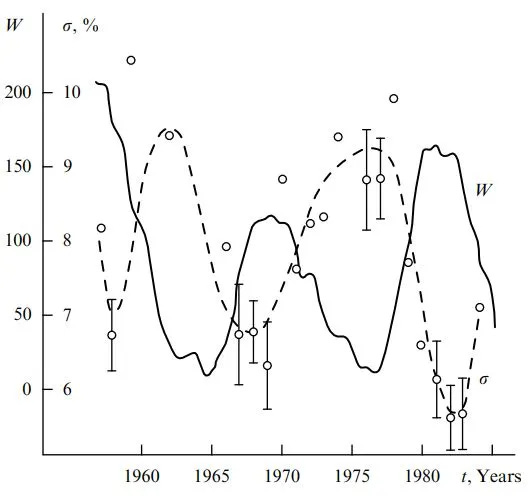

Richard Hutchin’s paper, Experimental Indication of a Variable Planck’s Constant, presents evidence suggesting that the allegedly-immutable h exhibits variability under certain conditions. The core argument of the paper is based on observed annual variations in the decay rates of eight radionuclides over a 20-year span by six organizations across three continents. These variations, observed in both beta decay (weak interaction) and alpha decay (strong interaction), led Hutchin to hypothesize that small variations in Planck’s constant might account for the synchronized variations in decay rates.

To test this hypothesis, Hutchin conducted a purely electromagnetic experiment with tunnel diodes, which recorded annual synchronized variations of 826 ppm (peak-to-[…] in strong and weak decays. Consequently, h would reach maxima around January–February and hit minima around July–August. This pattern aligns with the Earth’s changing orbital position, suggesting a potential gradient in Planck’s constant across the Earth’s orbit of approximately 21 ppm. Noteworthy is that the electrical phenomenon with diodes lagged the expected timing by ~1 month, implying opposite phases for electronic versus nuclear systems!

Hutchin theorizes that Planck’s constant may not be universal, but rather an environmental variable that can vary in space and time, and perhaps by frequency!

Cosmic Rhythms of Apparent Randomness

The Shnoll Effect links the variability of Planck’s constant to solar cycles and activity. It's like the sun has a remote control for radioactive decay rates, turning them up or down with its cosmic rhythms.

When the pursuit of knowing the universe’s most intricate details leads to more questions than answers, the work of Simon Shnoll is of great significance. Through meticulous experimentation and observation, Shnoll and his colleagues have uncovered a phenomenon that challenges our fundamental understanding of randomness: the Shnoll Effect.

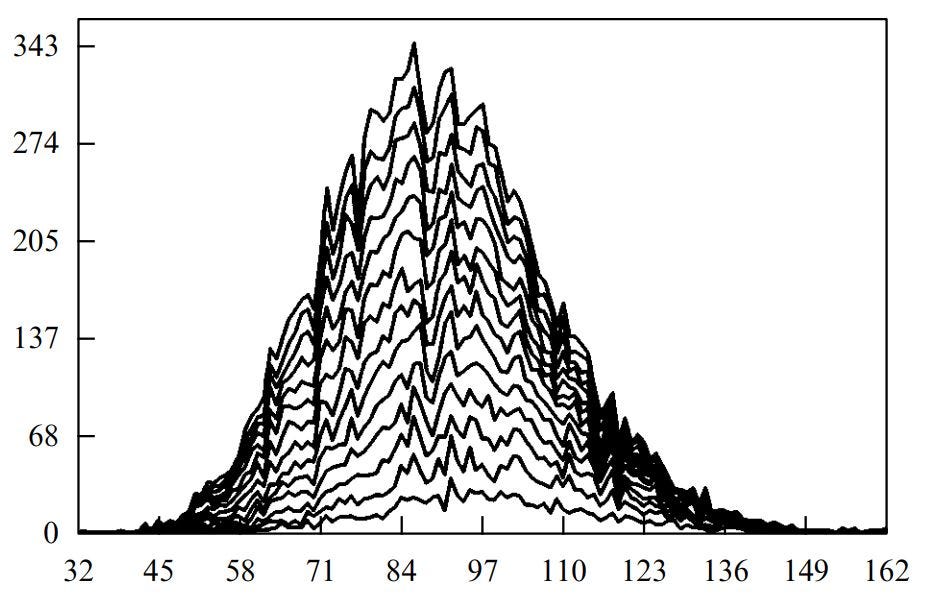

At its core, the Shnoll Effect reveals that the histograms of results from various random processes—such as the decay of radioactive elements, chemical reaction rates, and even fluctuations in financial markets—do not display the expected smooth, Gaussian distribution. Instead, these histograms exhibit a fine structure, a pattern of peaks and valleys that changes in a regular manner over time. This pattern is not confined to a single location; similar structures appear in measurements taken simultaneously at different places, provided they are aligned with the same local time.

One of the most striking demonstrations of the Shnoll Effect involves the use of collimators to direct beams of alpha particles from radioactive decay in specific directions. Shnoll’s experiments showed that the histograms of alpha decay rates exhibit a peculiar “palindrome phenomenon.” Depending on the direction the collimator faces (East or West), the sequence of histograms during the day mirrors the sequence from the preceding or following night, respectively. This finding suggests a profound connection between the observed patterns and the Earth’s rotation and its motion through space!

The implications of the Shnoll Effect extend beyond the mere observation of patterns in randomness. It hints at an underlying cosmic influence on what we perceive as stochastic (random probability distribution) processes. Earth’s movement through the inhomogeneous continuum of space, influenced by cosmophysical factors such as electrical, magnetic and gravitational fields, appears to modulate these random processes in a predictable manner. The daily, monthly, and yearly cycles of histogram shapes, as well as their dependence on the spatial orientation of measurements, underscore a cosmic rhythm to which even the most apparently random events dance.

This brings us to the intriguing intersection of the Shnoll Effect with research suggesting the variability of fundamental constants, such as Planck’s constant. If the fabric of space and time, influenced by the Earth’s motion and the positions of celestial bodies, can affect the fine structure of random processes, could it also modulate the values of constants that underpin the laws of physics? The work of researchers like Hutchin, positing a variable Planck’s constant, resonates with Shnoll’s findings. Both lines of inquiry challenge the static nature of physical laws and constants, bringing into focus a dynamic universe where even the most fundamental parameters are subject to cosmic rhythms.

In essence, the Shnoll Effect opens a window to a universe where the boundaries between randomness and order, between the microcosm of atomic processes and the macrocosm of celestial movements, are blurred.

Cosmic influences on radioactive decay rates

The relationship between radioactive decay rates and solar activity, including solar flares, solar rotation, and Earth’s orbital position has been a subject of scientific curiosity and investigation. This exploration has led to the discovery of potential variations in decay rates that could have profound implications for fields ranging from medicine and anthropology to the prediction of solar flares.

While not directly addressing the Shnoll effect, multiple studies over the past 20 years have detected annual oscillations in the decay rates of various radioactive isotopes. The decay rates fluctuate on a yearly cycle, peaking around January and reaching a minimum around July or August. The relative amplitude of the variations ranges from 0.1% to over 3%. The timing suggests these are the same data, or closely related data, used in Hutchin’s work.

These periodic fluctuations suggest radioactive decay may be influenced by annual changes related to the Earth’s orbit around the Sun. One hypothesis proposes that the annual variation in Earth’s distance from the Sun leads to changes in the solar neutrino flux that could impact decay rates. However, the phase and amplitude of the decay fluctuations do not perfectly match models based solely on Earth-Sun distance.
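To put a number on the Earth-Sun distance hypothesis, here is a short Python sketch of how much any flux that falls off with the square of distance would change between perihelion (early January) and aphelion (early July). The eccentricity is an approximate textbook value and the function names are hypothetical; the sketch says nothing about whether such a flux actually affects decay rates.

```python
ECCENTRICITY = 0.0167  # Earth's orbital eccentricity (approximate)


def relative_flux(distance_au):
    """Inverse-square flux, normalized to 1.0 at a distance of 1 AU."""
    return 1.0 / distance_au**2


def peak_to_peak_flux_variation(eccentricity=ECCENTRICITY):
    """Flux difference between perihelion and aphelion, relative to 1 AU."""
    perihelion_au = 1.0 - eccentricity  # closest approach, early January
    aphelion_au = 1.0 + eccentricity    # farthest point, early July
    return relative_flux(perihelion_au) - relative_flux(aphelion_au)


if __name__ == "__main__":
    variation = peak_to_peak_flux_variation()
    print(f"peak-to-peak inverse-square variation: {variation * 100:.2f}%")
```

Under these assumptions the swing is a little under 7% peak to peak, with the maximum in early January, which is the baseline against which the reported decay-rate phases and amplitudes are compared.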

In addition to solar neutrinos, other proposed explanations for the annual decay rate variations include interactions with solar wind particles, annual temperature variations, or unknown geophysical effects. However, no mechanisms can yet fully account for the observed amplitude and phase of the oscillations across different isotopes.

The potential relationship between radioactive decay rates and solar activity, along with the implications of the Shnoll effect and the variability of Planck’s constant, presents an intriguing area of scientific inquiry. This not only challenges the traditional understanding of physical constants, which require revision anyway to overcome the inherent philosophical reductionism blinding the paradigm-bound, but also gives us pause to consider the potential relationship to works such as that of L. Kolisko in her metal-planet crystallization experiments.

Planck’s Constant and the Nature of Light

Lori Gardi steps up with a bold thesis: Planck's constant is all about the energy of a single wave oscillation, and it's the same for all frequencies. She says you can calculate it just by looking at the time it takes for one cycle, challenging the idea that energy depends on frequency.

Lori Gardi, in her paper Planck’s Constant and the Nature of Light, proposes a reinterpretation of Planck’s constant that challenges the traditional understanding of this fundamental “constant.” According to Gardi’s Modified Unit Analysis (MUA), Planck’s constant, ℎ, is not the quantum of action as it is commonly understood, but rather the energy of one oscillation of an electromagnetic wave, regardless of the frequency!

According to Gardi, the numerical value of Planck’s constant h represents the energy per cycle of an electromagnetic wave, suggesting that each cycle of a wave carries a constant quantum of energy, which is the same for all frequencies. This interpretation diverges significantly from the standard view by proposing that the energy of light is not variable with frequency but is a fixed quantum of energy per cycle.
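The arithmetic behind this reading can be sketched in a few lines of Python. This is my own illustration of the idea as summarized here, not Gardi’s derivation: it takes the standard photon energy E = hν and divides by the number of cycles in one second, treating that count as a plain number. The function name is hypothetical.

```python
import math

H = 6.62607015e-34  # Planck constant, J*s (exact SI value)


def energy_per_cycle(frequency_hz):
    """Standard photon energy divided by the cycle count in one second."""
    photon_energy = H * frequency_hz     # E = h * nu
    cycles_in_one_second = frequency_hz  # treated as a dimensionless count (assumption)
    return photon_energy / cycles_in_one_second


if __name__ == "__main__":
    for nu in (1e6, 5e14, 3e18):  # radio, visible, X-ray (illustrative)
        same = math.isclose(energy_per_cycle(nu), H, rel_tol=1e-12)
        print(f"{nu:.0e} Hz -> {energy_per_cycle(nu):.6e} per cycle, equals h: {same}")
```

Whatever frequency goes in, the same number comes out, which is the numerical observation her per-cycle interpretation rests on; whether that number should carry units of energy or of action is exactly where her reading parts ways with the standard one.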

Gardi’s reinterpretation suggests a fundamental shift in understanding Planck’s constant, implying that the value of h, as the energy of a single oscillation, can be calculated from the dimension of time, specifically from the time of the oscillation. This contrasts with the standard interpretation where h is a fixed constant used to proportion the energy of a photon to its frequency. Even though h remains a constant in her model, what is constant is that the time reference is the wavelength, which of course changes with frequency.

Therefore, in both the standard interpretation and Gardi’s thesis, h is understood as a quantifier of action or energy related to processes or interactions, not as a physical entity or particle.

In this video, Gardi analyses some of the work of Tesla maestro Eric Dollard, using her thesis as a basis. Starting around 10 minutes, she seems to be saying that Ψ (dielectric flux) times Φ (magnetic flux) = h. I’m still processing all this.

Planky Doodle Dandy

Naturally, all these ideas are in contention in the scientific world, as they challenge the hard dogmas of orthodoxy. Yet that doesn’t prevent open-minded researchers from observing and following the data to new vistas of discovery. As followers of my work will already know, chemical reactions (Piccardi), metallic salt crystallizations (Kolisko), botanical form and rhythms (cosmological botany), and ‘viral’ disease waves (heliobiology) are all influenced by cosmic positions and cycles. There is a far greater picture here.

It is just in the thralldom of the subsensible realm that the modern mind can only see beneath matter for answers, and will miss the irreducible wholeness of Creation. Yet even when we look down there we find that the cosmos influences the most minute activities, revealing that the so-called constants are not constant at all, but rather cosmos-affected variables. The best answers will always be gifts from the supersensible formative cosmic intelligences, when we train our cognitive abilities to participate in their activities (Goethe, Steiner).

For the general believer in orthodox science, these ideas should lead to a new way of thinking about the so-called constants that underpin the current paradigm of physics, requiring adjustments to theories and models that currently assume these constants are immutable.

Those of us domiciled in the Borderlands of Science have long known the physics textbooks require metamorphic revision. Rather than looking to subsensible variable “constant” foundations to build upon, how about cognizing from the top down, with life, levity and consciousness as essential components?

I regard consciousness as fundamental. I regard matter as derivative from consciousness. We cannot get behind consciousness. Everything that we talk about, everything that we regard as existing, postulates consciousness. —Max Planck

Thank you for reading!

Chronicles of Alkemix is a reader-supported publication. To receive new posts and support my work, please consider becoming a paid subscriber.

Bravo!

You've been busy... So many tangents to follow!

I love the model of the Sun as hexagonal plates of hydrogen. I think I stumbled on it about the time I was researching graphene, which is a form of carbon that layers itself in single atomic hexagonal plates, and I found out that Exclusion Zone water, from Gerald Pollack, was hexagonal plates of water aligning themselves into parallel layers. Evidence that the phenomenological world has repeating patterns that work to create the reality we experience every day!